Robotic arm automation

An ongoing project to fully automate robotic arms so they can complete open-ended manufacturing tasks.

We use machine learning tools such as Physical Intelligence’s Pi zero (π0) model and Hugging Face’s LeRobot library to control and manipulate an arm through a VLA (vision-language-action) model.

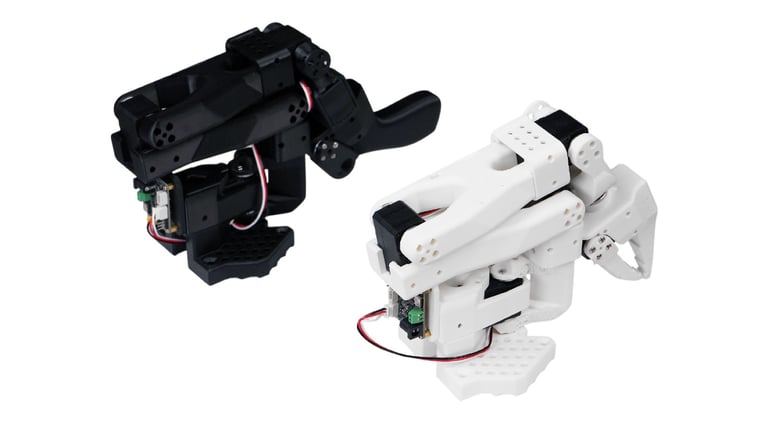

We are still adapting these open-source models to the three open-source robotic arms I’m working with: the PAROL6 by Source Robotics, the mikobot 1 by mikobot, and an SO-ARM100 leader and follower pair.

Robotics and AI

A couple of years ago, when I arrived in the UK to start my degree, I found myself in need of a project: something I could do in a relatively small space.

Like a lot of people, I’d recently started using ChatGPT and was curious about its possibilities and future applications, especially in robotics.

As I’d been considering a robotic arm project for a while, this seemed like the perfect time to take the leap.

But at the same time, I was fully aware of (and a little intimidated by) the software and hardware challenges I’d be facing. Until then, the deepest I’d gone in this field was building my trusty Voron printer and setting up its Raspberry Pi controller.

I started researching open-source repositories for robotic arm projects that could be built with off-the-shelf components, and learning the fundamentals of AI in robotics: what neural networks are, how they work, and what kind of code runs them.

I made contact with experts in the field, and from my conversations with them set a goal for the project: create a robotic arm capable of interacting with the real world based on text input.

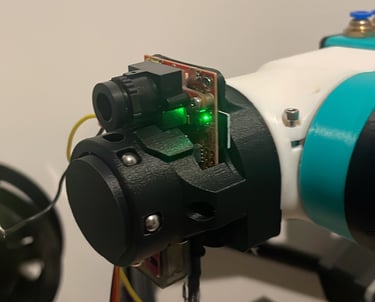

This led us to smaller processing systems: development modules such as the OpenMV camera, which can run small computer vision models to detect and recognize known objects.

For the arm, my now-assembled team and I built a PAROL6 robotic arm from scratch (from an existing GitHub project) and mounted the OpenMV camera module on its end.

Once installed, the camera module could locate a given object and relay its x and y coordinates back to the arm as JSON packets. We used AprilTags to get the z coordinate, since the OpenMV is a monocular camera and cannot perceive depth on its own. These JSON packets were sent to an ESP32 module, which was connected to the arm’s GUI over Wi-Fi.
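A minimal sketch of what such a packet could look like, in plain Python. It assumes a pinhole camera model, where a tag of known physical size appears smaller in proportion to its distance, so depth ≈ focal length (px) × tag size / tag width (px). The field names, focal length, and tag size are hypothetical placeholders rather than the project’s actual values, and x and y stay in image pixels (mapping them into arm coordinates would need a separate calibration).

```python
import json

# Hypothetical calibration values: replace with your own camera and tag measurements.
FOCAL_LENGTH_PX = 180.0  # camera focal length in pixels (assumed)
TAG_SIZE_MM = 40.0       # physical edge length of the printed AprilTag (assumed)


def estimate_depth_mm(tag_width_px: float) -> float:
    """Pinhole-camera depth estimate: Z = f * real_size / apparent_size."""
    if tag_width_px <= 0:
        raise ValueError("tag width must be positive")
    return FOCAL_LENGTH_PX * TAG_SIZE_MM / tag_width_px


def make_packet(label: str, x_px: int, y_px: int, tag_width_px: float) -> str:
    """Serialize one detection as a compact JSON string (hypothetical field names)."""
    packet = {
        "obj": label,
        "x": x_px,
        "y": y_px,
        "z_mm": round(estimate_depth_mm(tag_width_px), 1),
    }
    return json.dumps(packet, separators=(",", ":"))


def parse_packet(raw: str) -> tuple[str, int, int, float]:
    """Receiver side (e.g. the ESP32 bridge or GUI): decode back to (label, x, y, z)."""
    data = json.loads(raw)
    return data["obj"], data["x"], data["y"], data["z_mm"]


if __name__ == "__main__":
    msg = make_packet("bolt", 80, 60, 36.0)
    print(msg)
    print(parse_packet(msg))
```

Keeping the packet small and flat matters when it has to cross a serial or Wi-Fi link many times per second; the compact separators drop the spaces `json.dumps` would otherwise add.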

Once the hardware and software were running, we designed and built a tool changer and created a system that automatically locates the tool changer and swaps to a new tool based on predefined AprilTags.
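The tag-to-tool lookup can be sketched as a small table plus a search over the tags currently in view. This is an illustration under assumptions, not the project’s code: the tag IDs, tool names, and the `move_to` / `release_tool` / `lock_tool` step strings are hypothetical placeholders for whatever commands the arm’s controller actually accepts.

```python
from dataclasses import dataclass

# Hypothetical assignment of AprilTag IDs to tool docks (placeholder values).
TOOL_TAGS = {"gripper": 0, "suction_cup": 1, "screwdriver": 2}


@dataclass
class TagDetection:
    tag_id: int
    x_mm: float
    y_mm: float
    z_mm: float


def find_tool_dock(tool: str, detections: list[TagDetection]) -> TagDetection:
    """Return the visible tag that marks the dock for `tool`."""
    if tool not in TOOL_TAGS:
        raise KeyError(f"no tag assigned to tool {tool!r}")
    for det in detections:
        if det.tag_id == TOOL_TAGS[tool]:
            return det
    raise LookupError(f"dock for {tool!r} is not in view")


def plan_tool_swap(current: str, target: str, detections: list[TagDetection]) -> list[str]:
    """Return the current tool to its dock, then collect the target tool.

    The step strings are hypothetical placeholders for real controller commands.
    """
    drop = find_tool_dock(current, detections)
    pick = find_tool_dock(target, detections)
    return [
        f"move_to({drop.x_mm}, {drop.y_mm}, {drop.z_mm})",
        "release_tool",
        f"move_to({pick.x_mm}, {pick.y_mm}, {pick.z_mm})",
        "lock_tool",
    ]


if __name__ == "__main__":
    seen = [TagDetection(0, 100.0, 50.0, 20.0), TagDetection(2, 150.0, 50.0, 20.0)]
    for step in plan_tool_swap("gripper", "screwdriver", seen):
        print(step)
```

Looking docks up by tag ID, rather than by fixed coordinates, means the tool changer can be moved on the bench without re-teaching positions, as long as its tags stay visible.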

As I got deeper into this project, learning more about AI and machine vision and about what research organizations such as Physical Intelligence were doing, I began to realize that a VLM (vision-language model) would be the best way to reach the goals we had set out to achieve.

But training and deploying a VLM on an arm would require a lot more processing power than we were currently using.

This led me to another robotic arm design, which could be paired with openpi, newly released by Physical Intelligence: an open-source VLA, which applies a VLM to robot control by having it output actions. When used through LeRobot, this VLA can generate actions after training on datasets that contain both camera images and arm coordinates.

This new arm can be trained and operated with a physical emulator: an identical robotic arm with all of the same DH (Denavit-Hartenberg) parameters.
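Why identical DH parameters matter: forward kinematics chains one homogeneous transform per joint, so two arms that share a DH table reach the same end-effector pose for the same joint angles, and joint readings from one can be replayed on the other. A minimal sketch using the standard DH convention, with a hypothetical two-link planar table rather than the real SO-ARM100 parameters:

```python
import math

Matrix = list[list[float]]


def dh_transform(theta: float, d: float, a: float, alpha: float) -> Matrix:
    """Standard DH link transform: Rz(theta) * Tz(d) * Tx(a) * Rx(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transforms."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(dh_table: list[tuple[float, float, float, float]],
                       joint_angles: list[float]) -> Matrix:
    """Chain the link transforms; each row is (theta_offset, d, a, alpha).

    Any two arms with the same table give the same result for the same angles.
    """
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for (theta_off, d, a, alpha), q in zip(dh_table, joint_angles):
        T = matmul(T, dh_transform(q + theta_off, d, a, alpha))
    return T


# Hypothetical 2-link planar arm, link lengths in metres (NOT real SO-ARM100 values).
PLANAR_2LINK = [(0.0, 0.0, 0.10, 0.0), (0.0, 0.0, 0.10, 0.0)]

if __name__ == "__main__":
    T = forward_kinematics(PLANAR_2LINK, [math.pi / 2, -math.pi / 2])
    print(round(T[0][3], 3), round(T[1][3], 3), round(T[2][3], 3))
```

With the first joint at 90° and the second at -90°, the end effector sits at roughly (0.1, 0.1, 0) m; a second arm built to the same table would land in the same place.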

The emulator is key to easily training the arm on an episode: a recorded demonstration of a task that shows the arm what to do.
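An episode can be pictured as a time-stamped list of (observation, action) pairs. The sketch below assumes a leader/follower setup in which the leader’s joint positions serve as the action labels; the reader and command callbacks are hypothetical stand-ins for real hardware drivers, and this is not LeRobot’s own recording tool or dataset format.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Frame:
    timestamp: float     # seconds since the start of the episode
    observation: dict    # what the policy will see: follower state + camera image
    action: list[float]  # what the policy should output: leader joint positions


@dataclass
class Episode:
    task: str            # natural-language task description, e.g. "fold the towel"
    frames: list[Frame] = field(default_factory=list)


def record_episode(
    task: str,
    read_leader: Callable[[], list[float]],
    read_follower: Callable[[], list[float]],
    read_camera: Callable[[], Any],
    command_follower: Callable[[list[float]], None],
    steps: int,
    fps: int = 30,
) -> Episode:
    """Teleoperate the follower from the leader and log (observation, action) pairs.

    Timestamps assume a fixed control rate; a real loop would also wait between steps.
    """
    episode = Episode(task=task)
    for i in range(steps):
        observation = {"state": read_follower(), "image": read_camera()}
        action = read_leader()
        command_follower(action)  # the follower mirrors the leader
        episode.frames.append(Frame(i / fps, observation, action))
    return episode


if __name__ == "__main__":
    # Stub callbacks stand in for real arm and camera drivers.
    ep = record_episode("fold the towel", lambda: [0.1, 0.2], lambda: [0.0, 0.0],
                        lambda: "frame", lambda a: None, steps=3, fps=10)
    print(len(ep.frames), ep.frames[-1].timestamp)
```

Pairing each observation with the leader’s command at the same instant is what lets a policy later learn to map "what the follower sees" to "what the operator did".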

The same idea extends to a virtual twin: a simulated emulator that runs tasks and actions in simulation and uses the resulting synthetic data to train a VLA model on those tasks.

It’s early days with this new arm, but the current plan is to train it on a wide variety of tasks and skills, specifically for high-variance manufacturing applications, and to use these open-source models to support more robotic arms by creating a universal simulated emulator.

Two years on this project, in such a rapidly evolving field, have opened up a whole new world to me and expanded both my interests and my possible career paths.

LeRobot Projects

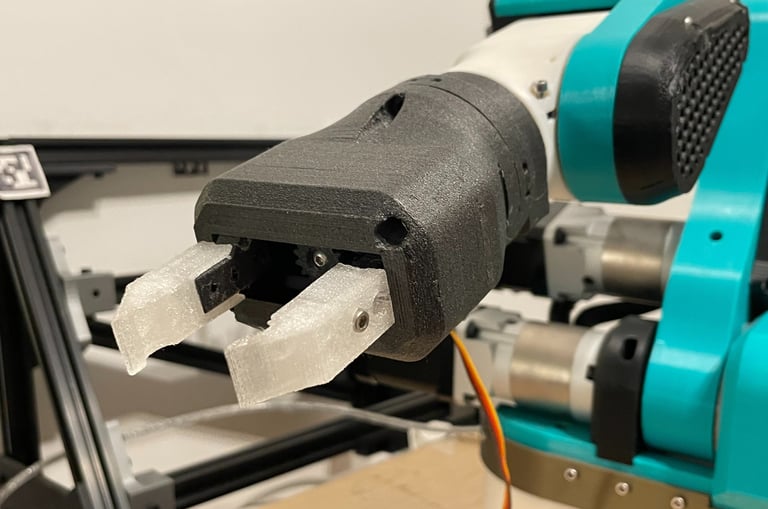

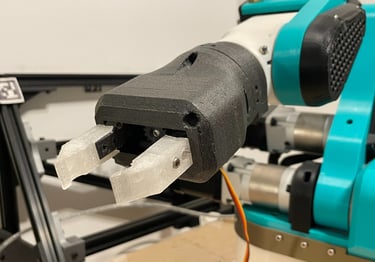

Folding a towel with ACT

The ACT (Action Chunking with Transformers) model enables the SO-100 robot to fold towels autonomously: a small task with big implications for automating repetitive work. This isn’t just about chores; it’s about redefining how AI and robotics can free humans to focus on creativity and innovation.

Why it matters:

- Automating the mundane: Robots handle tedious tasks (laundry, dishes, etc.), bringing us closer to a "chore-free" reality.

- Blueprint for scaling: This project lays the groundwork for broader AI-driven automation in homes and beyond.

- Human-first future: Let robots do the grunt work while we focus on creativity, strategy, and connection.

LeKiwi

LeKiwi is another open-source project from the Hugging Face LeRobot team: a mobile base meant to let robotic arms such as the SO-100 operate over a much greater area.

Build your own dreams

Or someone else will hire you to build theirs. Here is how you can take action – starting today.

Contact

Connect

2002oweno@gmail.com

+44 7587933294

© 2025. All rights reserved.